- Problem: Scaled a proof-of-concept into a GDPR-ready SaaS with AI tutoring, multiplayer quizzes, and zero cross-tenant data bleed.

- Role: Founding engineer & security lead

- Timeframe: 9-month refactor + rollout

- Stack: Next.js App Router • Express • PostgreSQL • Redis

- Focus: Next.js • Node.js • PostgreSQL

- Impact: API latency -40%

Context

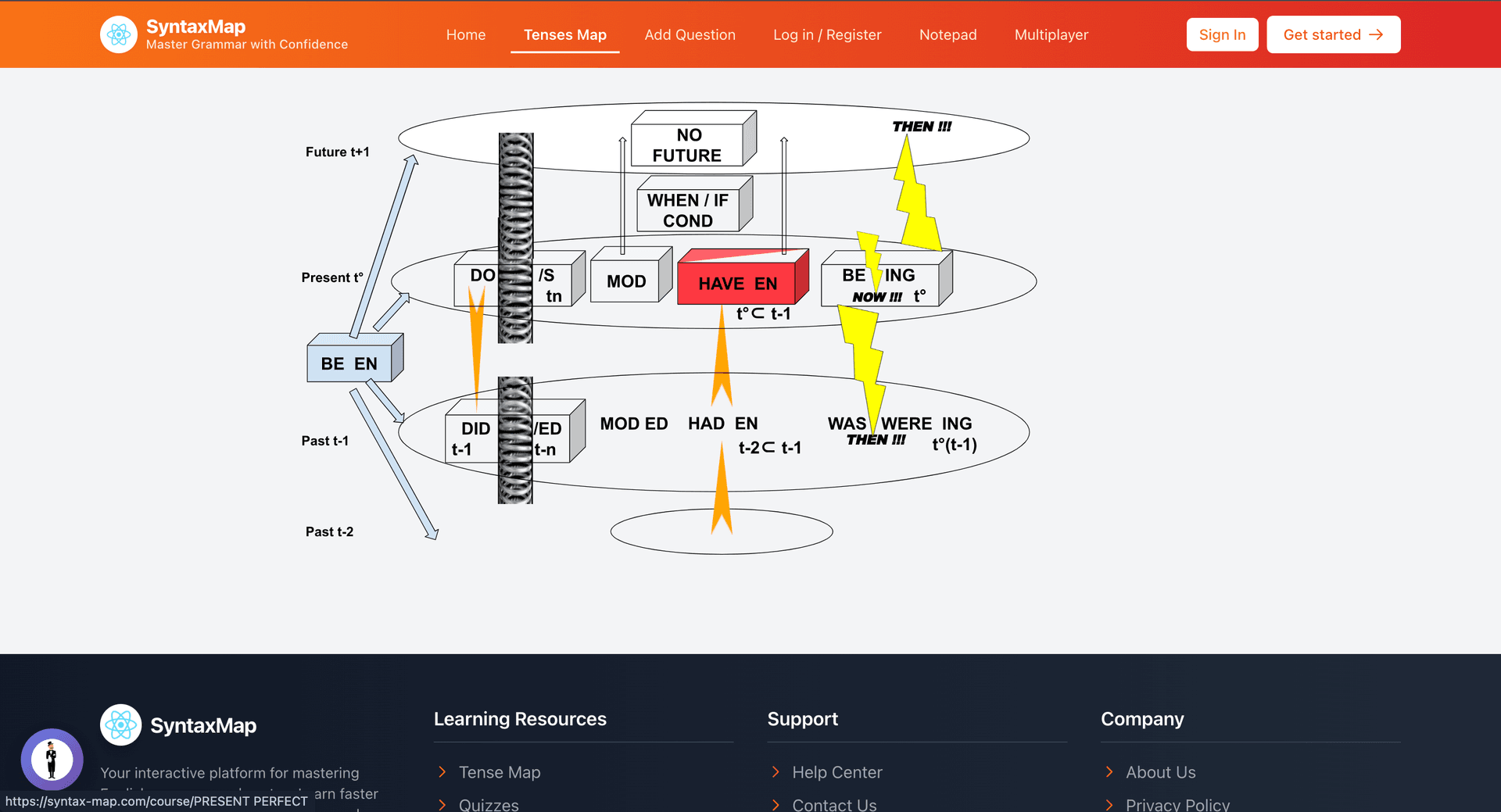

Situation: SyntaxMap started as a visual 'tense map' demo and quickly grew into a SaaS for schools that wanted adaptive grammar coaching. Each institution needed its own users, analytics, and AI settings, yet the original codebase was monolithic and insecure.

Task: Lead the architectural rewrite so the platform could handle concurrent multiplayer quizzes, AI tutoring, and GDPR-grade auditability without lengthening release cycles.

Secure multi-tenant AI tutoring SaaS with Next.js and Postgres

Row-level security and tenant-scoped tokens prevent cross-tenant data bleed.

The stack supports AI tutoring, analytics, and real-time classrooms at SaaS scale.

Real-time multiplayer + AI workflows with strict validation

Socket.IO namespaces and server authority keep sessions deterministic under load.
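To make "server authority" concrete, here is a minimal sketch of how a quiz round might accept or reject a player's move. The `Move` and `RoundState` shapes, the verdict names, and the per-player sequence number are assumptions for illustration; the production message format is not shown in this write-up.

```typescript
// Hypothetical message and state shapes (not the production schema).
interface Move {
  playerId: string;
  seq: number; // client-assigned, strictly increasing per player
  questionId: string;
  answer: string;
}

interface RoundState {
  questionId: string;
  deadlineMs: number; // set from the server clock only
  lastSeq: Map<string, number>; // highest accepted seq per player
  answers: Map<string, string>; // first accepted answer per player
}

type Verdict =
  | "accepted"
  | "replayed"
  | "wrong-question"
  | "late"
  | "already-answered";

// The server decides with its own clock (nowMs); client timestamps are never trusted.
function applyMove(state: RoundState, move: Move, nowMs: number): Verdict {
  const last = state.lastSeq.get(move.playerId) ?? -1;
  if (move.seq <= last) return "replayed"; // duplicate or re-sent packet
  if (move.questionId !== state.questionId) return "wrong-question";
  if (nowMs > state.deadlineMs) return "late";
  if (state.answers.has(move.playerId)) return "already-answered";
  state.lastSeq.set(move.playerId, move.seq);
  state.answers.set(move.playerId, move.answer);
  return "accepted";
}
```

Because the verdict depends only on server-held state and the server clock, replaying the same inputs yields the same outcome, which is one way to read "deterministic" here.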

Zod-validated contracts harden prompts and responses before they reach the LLM.
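The gating order described here (shape check, then allowlist, then quota) can be sketched as a single function. The project uses Zod for the shape check; to stay dependency-free, this sketch substitutes a hand-written type guard. The request fields, template IDs, and limits are hypothetical.

```typescript
// Hypothetical request shape and limits (illustrative values only).
interface TutorRequest {
  tenantId: string;
  templateId: string;
  studentText: string;
}

const ALLOWED_TEMPLATES = new Set<string>(["explain-tense", "correct-sentence"]);
const MAX_STUDENT_TEXT = 2000; // characters
const DAILY_TOKEN_QUOTA = 50000; // tokens per tenant per day

type GateResult =
  | { ok: true; request: TutorRequest }
  | { ok: false; reason: string };

// Stand-in for a Zod schema: narrows unknown input to TutorRequest.
function isTutorRequest(value: unknown): value is TutorRequest {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  return (
    typeof v.tenantId === "string" &&
    typeof v.templateId === "string" &&
    typeof v.studentText === "string"
  );
}

// Runs every check before anything is sent to the LLM provider.
function gateTutorRequest(
  raw: unknown,
  tokensUsedToday: number,
  estimatedTokens: number
): GateResult {
  if (!isTutorRequest(raw)) return { ok: false, reason: "invalid-shape" };
  if (!ALLOWED_TEMPLATES.has(raw.templateId)) {
    return { ok: false, reason: "template-not-allowed" };
  }
  if (raw.studentText.length === 0 || raw.studentText.length > MAX_STUDENT_TEXT) {
    return { ok: false, reason: "text-length" };
  }
  if (tokensUsedToday + estimatedTokens > DAILY_TOKEN_QUOTA) {
    return { ok: false, reason: "quota-exceeded" };
  }
  // Copy only the known fields so unexpected extras never reach the prompt.
  return {
    ok: true,
    request: {
      tenantId: raw.tenantId,
      templateId: raw.templateId,
      studentText: raw.studentText,
    },
  };
}
```

Selecting a prompt by `templateId` rather than accepting free-form prompt text is the design choice that limits prompt injection: user input only ever fills a slot in a vetted template.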

Architecture

- Introduced tenant-aware service modules backed by Postgres row-level security (RLS) and schema partitioning; every API token now embeds tenant and role context.

- Wrapped all AI interactions in Zod validators, prompt allowlists, and token quota guards before requests ever hit the LLM provider.

- Rebuilt the frontend with Next.js App Router, Suspense-friendly data hooks, and Zustand stores for deterministic hydration across client + server.

- Implemented Socket.IO namespaces per classroom with authoritative server timers, replay-safe move validation, and heartbeat pruning.

- Containerized the stack via Docker Compose (frontend, API, workers, n8n, Postgres, Redis) and managed environment secrets through Doppler or injectable vaults.

- Added GitHub Actions pipelines (lint, typecheck, Jest, Trivy, Gitleaks) plus preview deployments for curriculum stakeholders.

- Automated operational workflows via n8n: weekly cohort emails, guardian reports, and anomaly alerts when engagement dips below thresholds.

- Built analytics overlays showing AI tutor vs. instructor impact; results feed directly into school SLAs.
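As an illustration of the first item in the list above, a tenant-aware service module might check the tenant and role carried by a token before touching any data. This is a minimal sketch: the claim names, the three roles, and their ordering are assumptions, and signature verification of the token is presumed to have happened upstream.

```typescript
// Hypothetical roles and claim layout (not taken from the real token format).
type Role = "student" | "instructor" | "admin";

interface TokenClaims {
  userId: string;
  tenantId: string;
  role: Role;
}

type AccessDecision = "ok" | "wrong-tenant" | "insufficient-role";

// Higher rank means more privilege.
const ROLE_RANK: Record<Role, number> = { student: 0, instructor: 1, admin: 2 };

// Called at the top of each service method, before any query runs.
function checkTenantAccess(
  claims: TokenClaims,
  resourceTenantId: string,
  minimumRole: Role
): AccessDecision {
  // The tenant check comes first: a role in one tenant grants nothing in another.
  if (claims.tenantId !== resourceTenantId) return "wrong-tenant";
  if (ROLE_RANK[claims.role] < ROLE_RANK[minimumRole]) return "insufficient-role";
  return "ok";
}
```

A check like this sits in front of the database-level policy rather than replacing it, so a bug in either layer alone does not expose another tenant's rows.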

Security / Threat Model

- Shared schemas risked cross-tenant data exposure through joins that lacked tenant filters.

- LLM prompts accepted raw markdown from instructors, opening prompt-injection attack vectors.

- Socket.IO rooms lacked cleanup logic, causing ghost sessions under load.

- Secrets for n8n automations lived in plain text, violating compliance.

- Tests covered less than 20% of the code, making refactors risky.

Tradeoffs & Lessons

Security, pedagogy, and delight are not competing priorities when multi-tenancy is a first-class requirement. Guardrails (RLS, prompt hygiene, observability) freed the team to ship high-trust features instead of fighting fires.

Results

API latency dropped 40%, multi-school pilots ran 50+ concurrent multiplayer sessions at under 150 ms, and no cross-tenant leaks surfaced in chaos testing. Onboarding shrank from a day of manual DB edits to a 6-minute CLI script. AI tutor engagement drove a 23% uptick in assignment completion, and the compliance package (DPIA, audit logs, data-retention plans) passed external review without rework.

FAQ

How is tenant isolation enforced?

Postgres RLS, tenant-aware services, and scoped API tokens prevent cross-tenant access.
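One common way to wire RLS into request handling is to set the tenant as a transaction-local setting that the policy reads. The sketch below assumes that pattern; the table name, the `app.tenant_id` setting, and the `withTenant` helper are hypothetical, and the `Queryable` interface is a minimal stand-in for whatever database client is in use.

```typescript
// Assumed one-time DDL per tenant-owned table (names are hypothetical):
//   ALTER TABLE quiz_results ENABLE ROW LEVEL SECURITY;
//   ALTER TABLE quiz_results FORCE ROW LEVEL SECURITY; -- also applies to the table owner
//   CREATE POLICY tenant_isolation ON quiz_results
//     USING (tenant_id = current_setting('app.tenant_id')::uuid);

// Minimal structural type for a database client with a promise-based query method.
interface Queryable {
  query(text: string, values?: unknown[]): Promise<unknown>;
}

// Runs `work` inside a transaction whose RLS context is pinned to one tenant.
async function withTenant<T>(
  client: Queryable,
  tenantId: string,
  work: (c: Queryable) => Promise<T>
): Promise<T> {
  await client.query("BEGIN");
  try {
    // Third argument true = transaction-local, so the setting cannot leak
    // to the next request that reuses a pooled connection.
    await client.query("SELECT set_config('app.tenant_id', $1, true)", [tenantId]);
    const result = await work(client);
    await client.query("COMMIT");
    return result;
  } catch (err) {
    await client.query("ROLLBACK");
    throw err;
  }
}
```

With this in place, a query inside `work` that forgets its `WHERE tenant_id = ...` clause still returns only the current tenant's rows, provided the connecting role is not a superuser and lacks `BYPASSRLS`.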

How are AI risks reduced?

Prompt allowlists, schema validation, and usage quotas gate every LLM request.

How is real-time reliability handled?

Heartbeat pruning, server timers, and authoritative state keep sessions stable.
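Heartbeat pruning can be sketched as a small registry that records when each session was last seen and drops the ones that have gone quiet. The class name, method names, and timeout value are hypothetical; in a real server, `heartbeat` would be called from a socket event handler and `prune` from a periodic timer.

```typescript
// Tracks the last heartbeat per session and evicts stale ones.
class SessionRegistry {
  private lastSeen = new Map<string, number>();
  private readonly timeoutMs: number;

  constructor(timeoutMs: number) {
    this.timeoutMs = timeoutMs;
  }

  // Record that a session is still alive at server time nowMs.
  heartbeat(sessionId: string, nowMs: number): void {
    this.lastSeen.set(sessionId, nowMs);
  }

  // Remove sessions silent for longer than timeoutMs; return their ids
  // so the caller can release rooms, timers, and other per-session state.
  prune(nowMs: number): string[] {
    const removed: string[] = [];
    this.lastSeen.forEach((seen, id) => {
      if (nowMs - seen > this.timeoutMs) {
        this.lastSeen.delete(id);
        removed.push(id);
      }
    });
    return removed;
  }

  get size(): number {
    return this.lastSeen.size;
  }
}
```

Socket.IO's own `pingInterval` and `pingTimeout` server options detect dead transports; an application-level registry like this one additionally cleans up game state tied to a session, which is what prevents ghost sessions from accumulating.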